Event-Driven Architectures demystified: from Producer to Consumer – part 2

11 February 2026 - 17 min. read

Eric Villa

Solutions Architect

```yaml
S3Bucket:
  Type: AWS::S3::Bucket
  Properties:
    BucketName: 'subdomain.mydomain.com'
    CorsConfiguration:
      CorsRules:
        - AllowedHeaders:
            - '*'
          AllowedMethods:
            - GET
            - HEAD
            - POST
            - PUT
            - DELETE
          AllowedOrigins:
            - 'https://*.mydomain.com'
    PublicAccessBlockConfiguration:
      BlockPublicAcls: true
      BlockPublicPolicy: true
      IgnorePublicAcls: true
      RestrictPublicBuckets: true
    WebsiteConfiguration:
      ErrorDocument: error.html
      IndexDocument: index.html

S3BucketPolicy:
  Type: AWS::S3::BucketPolicy
  Properties:
    Bucket: !Ref S3Bucket
    PolicyDocument:
      Version: '2012-10-17'
      Statement:
        - Sid: VPCEndpointReadGetObject
          Effect: Allow
          Principal: "*"
          Action: s3:GetObject
          Resource: !Sub '${S3Bucket.Arn}/*'
          Condition:
            StringEquals:
              aws:sourceVpce: !Ref S3VPCEndpointId
```

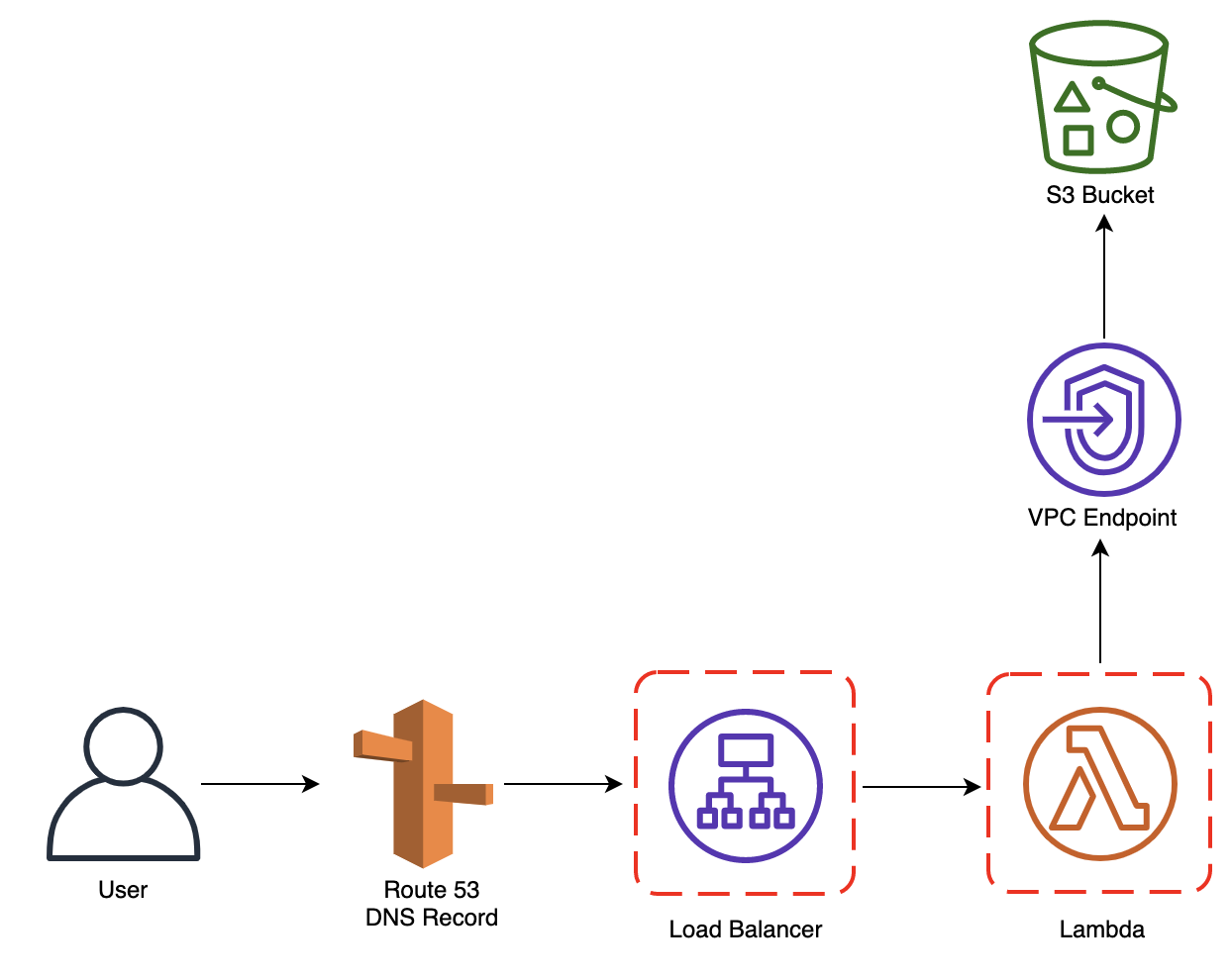
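The bucket policy relies on AWS's default (implicit) deny: a request succeeds only if some statement explicitly allows it. The Python sketch below is a deliberately simplified model of the single statement in S3BucketPolicy, not of S3's real authorization logic, which also weighs identity policies, ACLs, Block Public Access settings and more. The endpoint ID vpce-0123456789abcdef0 is a hypothetical placeholder standing in for the S3VPCEndpointId parameter.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical placeholder for the S3VPCEndpointId template parameter
ALLOWED_VPCE = "vpce-0123456789abcdef0"


@dataclass
class Request:
    action: str                 # e.g. "s3:GetObject"
    source_vpce: Optional[str]  # value of aws:SourceVpce, None outside an endpoint


def evaluate(request: Request, allowed_vpce: str = ALLOWED_VPCE) -> str:
    """Simplified model: only the VPCEndpointReadGetObject statement is considered."""
    statement_matches = (
        request.action == "s3:GetObject"
        and request.source_vpce == allowed_vpce  # the StringEquals condition
    )
    # When no statement matches, the request falls back to the implicit deny
    return "Allow" if statement_matches else "ImplicitDeny"


# Usage
print(evaluate(Request("s3:GetObject", ALLOWED_VPCE)))  # Allow
print(evaluate(Request("s3:GetObject", None)))          # ImplicitDeny
print(evaluate(Request("s3:PutObject", ALLOWED_VPCE)))  # ImplicitDeny
```

No explicit Deny statement is needed: anything the Allow statement does not match, such as a GetObject arriving from the internet, is refused by default.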

It’s worth noticing that “website configuration” has been enabled to allow HTTP requests towards the bucket, while the Bucket Policy only allows s3:GetObject for requests coming through the account’s S3 VPC endpoint. Since no other statement grants access, every other request is implicitly denied, ensuring that only allowed actors passing through the account’s VPC can read objects from that very bucket.

```yaml
LoadBalancer:
  Type: AWS::ElasticLoadBalancingV2::LoadBalancer
  Properties:
    Name: !Sub '${ProjectName}'
    LoadBalancerAttributes:
      - Key: 'idle_timeout.timeout_seconds'
        Value: '60'
      - Key: 'routing.http2.enabled'
        Value: 'true'
      - Key: 'access_logs.s3.enabled'
        Value: 'true'
      - Key: 'access_logs.s3.prefix'
        Value: loadbalancers
      - Key: 'access_logs.s3.bucket'
        Value: !Ref S3LogsBucketName
    Scheme: internet-facing
    SecurityGroups:
      - !Ref LoadBalancerSecurityGroup
    Subnets:
      - !Ref SubnetPublicAId
      - !Ref SubnetPublicBId
      - !Ref SubnetPublicCId
    Type: application

LoadBalancerSecurityGroup:
  Type: AWS::EC2::SecurityGroup
  Properties:
    GroupName: !Sub '${ProjectName}-alb'
    GroupDescription: !Sub '${ProjectName} Load Balancer Security Group'
    SecurityGroupIngress:
      - CidrIp: 0.0.0.0/0
        Description: ALB Ingress rule from world
        FromPort: 80
        ToPort: 80
        IpProtocol: tcp
      - CidrIp: 0.0.0.0/0
        Description: ALB Ingress rule from world
        FromPort: 443
        ToPort: 443
        IpProtocol: tcp
    Tags:
      - Key: Name
        Value: !Sub '${ProjectName}-alb'
      - Key: Environment
        Value: !Ref Environment
    VpcId: !Ref VPCId

HttpListener:
  Type: AWS::ElasticLoadBalancingV2::Listener
  Properties:
    DefaultActions:
      - RedirectConfig:
          Port: '443'
          Protocol: HTTPS
          StatusCode: 'HTTP_301'
        Type: redirect
    LoadBalancerArn: !Ref LoadBalancer
    Port: 80
    Protocol: HTTP

HttpsListener:
  Type: AWS::ElasticLoadBalancingV2::Listener
  Properties:
    Certificates:
      - CertificateArn: !Ref LoadBalancerCertificateArn
    DefaultActions:
      - Type: forward
        TargetGroupArn: !Ref TargetGroup
    LoadBalancerArn: !Ref LoadBalancer
    Port: 443
    Protocol: HTTPS

TargetGroup:
  Type: AWS::ElasticLoadBalancingV2::TargetGroup
  Properties:
    Name: !Sub '${ProjectName}'
    HealthCheckEnabled: false
    TargetType: lambda
    Targets:
      - Id: !GetAtt Lambda.Arn
  DependsOn: LambdaPermission
```

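Thanks to this template, a public (internet-facing) Application Load Balancer is deployed with two listeners: one on port 80 (HTTP) that redirects every request to port 443 (HTTPS) with a 301, and one on port 443 that forwards traffic to a Target Group with a specific Lambda function registered on it. That Lambda function acts as a proxy between the Load Balancer and the bucket’s website endpoint:

```python
import base64
from os import environ as os_environ

from urllib3 import PoolManager

# Reused across invocations in the same Lambda execution environment
http = PoolManager()


def handler(event, context):
    try:
        print(event)
        print(context)

        # Host used by the client, e.g. "<prefix>-<feature>.mydomain.com"
        host = event['headers']['host']
        print("Host:", host)

        # Keep the first DNS label and drop its first dash-separated token
        feature = host.split('.')[0]
        feature = "-".join(feature.split('-')[1:])
        print("Feature:", feature)

        # Requests for the root are served the feature's index document
        path = event['path'] if event['path'] != "/" else "/index.html"
        print("Path:", path)

        # The ALB forwards query string parameters as received, without decoding them
        query_string_parameters = [
            f"{key}={value}"
            for key, value in (event.get('queryStringParameters') or {}).items()
        ]
        print("Query String Parameters:", query_string_parameters)

        http_method = event["httpMethod"]

        # S3 website endpoint of the bucket (region hardcoded to eu-west-1)
        url = f"http://{os_environ['S3_BUCKET']}.s3-website-eu-west-1.amazonaws.com/{feature}{path}"
        if query_string_parameters:
            url += "?" + "&".join(query_string_parameters)
        print(url)

        # Forward the client headers except Host: urllib3 sets it from the URL
        headers = dict(event['headers'])
        headers.pop("host", None)
        print("Headers:", headers)

        # The ALB base64-encodes binary request bodies
        body = event.get('body')
        if body and event.get('isBase64Encoded'):
            body = base64.b64decode(body)
        print("Body:", body)

        r = http.request(http_method, url, headers=headers, body=body or None)
        print("Response:", r.status)
        print("Headers Response:", dict(r.headers))

        # Always return a base64 body so binary objects (images, fonts) survive
        encoded_body = base64.b64encode(r.data).decode('ascii')

        return {
            # Propagate S3's status (e.g. 404) instead of always answering 200
            'statusCode': r.status,
            'statusDescription': f"{r.status} {r.reason}",
            'body': encoded_body,
            'headers': dict(r.headers),
            'isBase64Encoded': True
        }

    except Exception as e:
        print(e)
        # The request could not be proxied to S3
        return {
            'statusCode': 502,
            'statusDescription': '502 Bad Gateway',
            'headers': {'Content-Type': 'text/plain'},
            'body': 'Bad Gateway',
            'isBase64Encoded': False
        }
```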

Despite being a little tricky to read, the Lambda function’s logic is quite simple: starting from the DNS name the user calls to reach the Load Balancer, it extracts the feature name and forwards the request to the matching subfolder of the S3 bucket through the bucket’s website endpoint. For the whole process to work as expected, a DNS name pointing to the Load Balancer must be created for each feature.
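Since the string handling is the hardest part of the handler to read, here is the same host, path and query-string logic isolated in a hypothetical pure function, build_origin_url, so it can be exercised without AWS. The host naming convention <prefix>-<feature>.mydomain.com is inferred from the code, which drops the first dash-separated token of the first DNS label; the bucket name and hosts used below are only examples.

```python
from typing import Dict, Optional


def build_origin_url(
    host: str,
    path: str,
    query: Optional[Dict[str, str]],
    bucket: str,
    region: str = "eu-west-1",
) -> str:
    """Hypothetical helper mirroring the handler's URL construction."""
    # "app-feature-login.mydomain.com" -> "app-feature-login" -> "feature-login"
    first_label = host.split(".")[0]
    feature = "-".join(first_label.split("-")[1:])

    # The root maps to the feature's index document
    if path == "/":
        path = "/index.html"

    # eu-west-1 uses the dash form; some other regions use "s3-website.<region>"
    url = f"http://{bucket}.s3-website-{region}.amazonaws.com/{feature}{path}"

    params = [f"{key}={value}" for key, value in (query or {}).items()]
    if params:
        url += "?" + "&".join(params)
    return url


# Usage
print(build_origin_url("app-feature-login.mydomain.com", "/", None,
                       "subdomain.mydomain.com"))
# http://subdomain.mydomain.com.s3-website-eu-west-1.amazonaws.com/feature-login/index.html
```

Because only the first token is stripped, a host without any dash (e.g. app.mydomain.com) yields an empty feature and a URL ending in //index.html, so the naming convention has to be respected when the per-feature DNS names are created.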
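Creating the per-feature DNS names can be automated. Below is a minimal sketch with boto3, assuming the domain is hosted in Route 53 and each feature gets an alias A record pointing at the Load Balancer. The helper names, the "app" prefix and the naming convention are assumptions chosen to match what the Lambda function parses; the record builder is kept separate from the API calls so it can be checked without AWS credentials.

```python
from typing import Any, Dict


def feature_record_change(feature: str, domain: str, alb_dns_name: str,
                          alb_hosted_zone_id: str, prefix: str = "app") -> Dict[str, Any]:
    """Build a Route 53 UPSERT change: alias A record "<prefix>-<feature>.<domain>" -> ALB."""
    # The Lambda drops only the first dash-separated token, so the prefix must not contain "-"
    if "-" in prefix:
        raise ValueError("prefix must not contain '-'")
    return {
        "Action": "UPSERT",
        "ResourceRecordSet": {
            "Name": f"{prefix}-{feature}.{domain}",
            "Type": "A",
            "AliasTarget": {
                # The ALB's canonical hosted zone ID, not the ID of your own zone
                "HostedZoneId": alb_hosted_zone_id,
                "DNSName": alb_dns_name,
                "EvaluateTargetHealth": False,
            },
        },
    }


def upsert_feature_record(feature: str, domain: str, zone_id: str, alb_name: str) -> None:
    """Create or update the record through the AWS APIs (requires credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    alb = boto3.client("elbv2").describe_load_balancers(Names=[alb_name])["LoadBalancers"][0]
    change = feature_record_change(feature, domain, alb["DNSName"], alb["CanonicalHostedZoneId"])
    boto3.client("route53").change_resource_record_sets(
        HostedZoneId=zone_id,
        ChangeBatch={"Comment": f"DNS name for feature {feature}", "Changes": [change]},
    )
```

For instance, upsert_feature_record("feature-login", "mydomain.com", "<your-hosted-zone-id>", "<project-name>") would point app-feature-login.mydomain.com at the Load Balancer. In any case, the certificate attached to the HTTPS listener must cover these hostnames; a wildcard certificate for *.mydomain.com does, since each feature name is a single label under the domain.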