Event-Driven Architectures demystified: from Producer to Consumer – part 2

11 February 2026 - 17 min. read

Eric Villa

Solutions Architect

"Live as if you were to die tomorrow. Learn as if you were to live forever"

- Mahatma Gandhi

I like learning new things, and I am lucky because a significant part of my work involves learning new technologies and evaluating how to use them to solve new and existing problems.

Over the past few years, we have described many options for connecting workloads running on AWS (Transit Gateway, load balancing, shared VPCs, PrivateLink and endpoint services, VPC peering, and more).

In 2023, VPC Lattice reached general availability, offering a new option for us to explore. You may be wondering why we should learn yet another service when we already have so many options to choose from... Well, let's find out!

In this article, we'll see what this service offers, how it differs from the connectivity options we already know, and how it works in a simple use case.

So, without any further ado, let's dig in!

As we saw in previous articles, microservices are an architectural approach that allows developers to build and deploy software faster. However, this approach introduces challenges: a single application now consists of numerous individual components that can be spread across different AWS accounts and must communicate with each other, often using different technologies together: Lambda, EKS, ECS, and EC2.

We must handle tasks like service discovery, traffic routing, authorization, security, and detailed metrics. In some scenarios, a strict communication and authorization policy between microservices is complex to implement using "classical" networking approaches.

Think about allowing communication only between two microservices in different AWS accounts while blocking all other traffic, without implementing custom solutions and authentication.

VPC Lattice aims to help organizations overcome these challenges while keeping a secure and manageable approach.

It lets developers manage microservice definitions, authorization, and configuration, while administrators manage infrastructure, networking, communication policies, and governance; we'll see more in the following sections.

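VPC Lattice has some key components:

- Service: an independently deployable unit of software that delivers a specific task. Its listeners and rules route requests to target groups, which can contain EC2 instances, IP addresses, Lambda functions, or Application Load Balancers.
- Service network: a logical boundary for a collection of services; VPCs associated with a service network can reach the services associated with it.
- Auth policy: an IAM resource policy attached to a service network or a service to control access. Policies can use condition keys such as vpc-lattice-svcs:RequestMethod to filter allowed methods. For a complete list, you can have a look at the documentation.
- Service directory: a central registry of the services you own or that are shared with your account through AWS RAM.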
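As an example of what an auth policy looks like, the sketch below writes a policy that lets a single IAM role invoke a service with GET requests only, using the RequestMethod condition key. The helper name, role ARN, and service ID are hypothetical placeholders, and the sketch assumes the AWS CLI v2 is configured.

```shell
#!/usr/bin/env bash
# Sketch: write an auth policy allowing one IAM role to invoke a service
# with GET requests only. Helper name, ARN and IDs are hypothetical.

# Args: principal ARN, output file
write_get_only_policy() {
  local principal_arn="$1" out_file="$2"
  cat > "$out_file" <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "AWS": "${principal_arn}" },
      "Action": "vpc-lattice-svcs:Invoke",
      "Resource": "*",
      "Condition": {
        "StringEquals": { "vpc-lattice-svcs:RequestMethod": "GET" }
      }
    }
  ]
}
EOF
}

# Usage (hypothetical IDs). The service must use the AWS_IAM auth type
# for the policy to be enforced:
#   aws vpc-lattice update-service --service-identifier svc-0123456789abcdef0 --auth-type AWS_IAM
#   write_get_only_policy arn:aws:iam::111122223333:role/app1-role policy.json
#   aws vpc-lattice put-auth-policy --resource-identifier svc-0123456789abcdef0 \
#     --policy file://policy.json
```

Keep in mind that callers must sign their requests with SigV4 (signing name vpc-lattice-svcs), and that if the service network also uses AWS_IAM, its own auth policy must allow the request too.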

Let's briefly look at the roles and steps required to set up communication with VPC Lattice.

As you can see, there is a certain degree of independence in managing services, so dependencies between teams and operations are reduced.

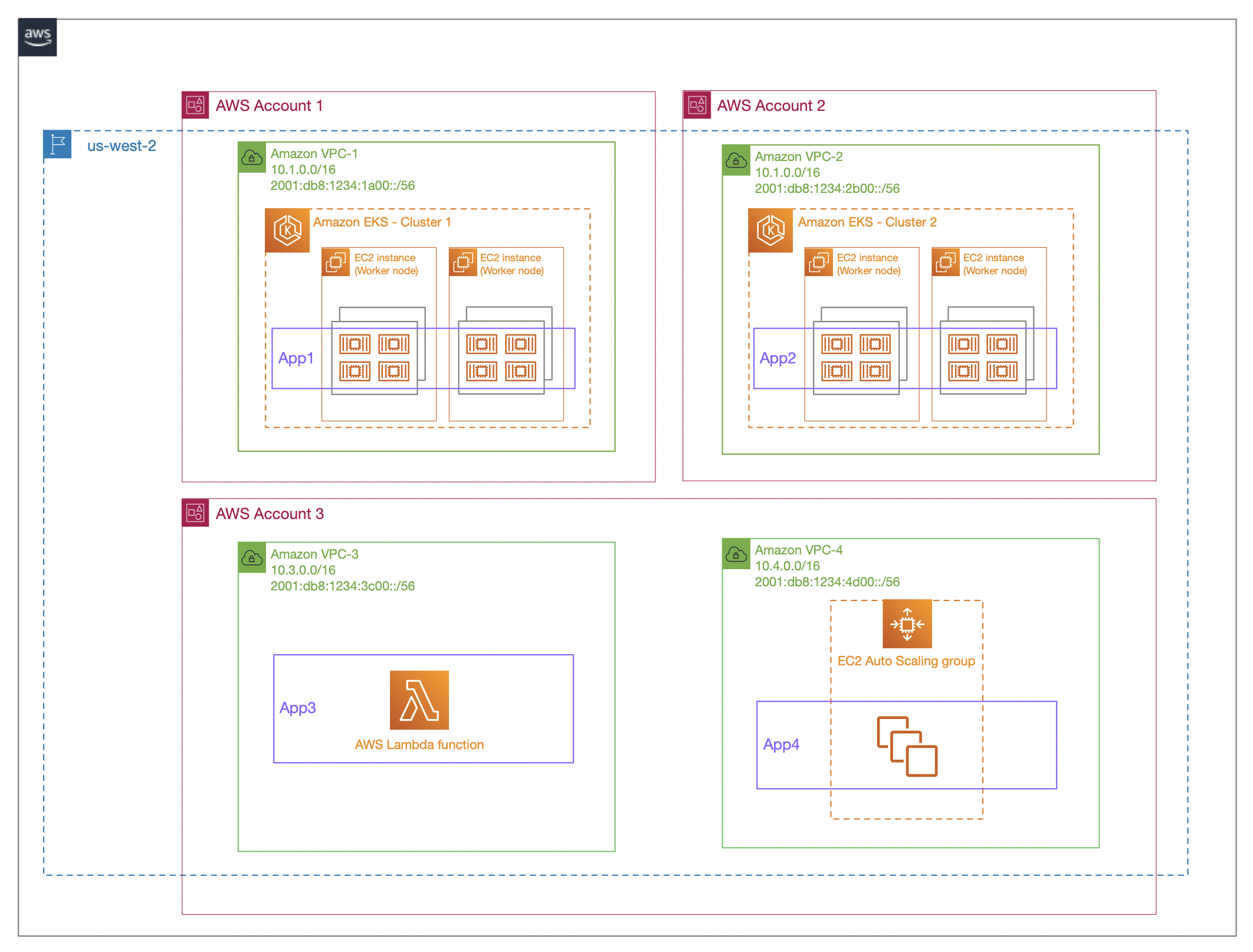
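The roles and steps above can also be sketched with the AWS CLI v2. This is a minimal, single-account sketch under our own assumptions: a Lambda function as the only target, an HTTP listener on port 80, auth type left at its default (NONE), and no waiting for the asynchronous associations to become active. The lattice_setup helper is hypothetical and all names and IDs are placeholders; in a multi-account setup, the service network would also be shared through AWS RAM, which is not shown.

```shell
#!/usr/bin/env bash
# Sketch of the VPC Lattice setup sequence (AWS CLI v2). Hypothetical helper;
# no error handling or waiting for associations to become ACTIVE.
set -euo pipefail

# Args: service network name, service name, Lambda function ARN, consumer VPC ID
lattice_setup() {
  local sn_name="$1" svc_name="$2" lambda_arn="$3" vpc_id="$4"
  local sn_id svc_id tg_id

  # 1. Administrator: create the service network and associate the consumer VPC.
  sn_id=$(aws vpc-lattice create-service-network --name "$sn_name" \
    --query id --output text)
  aws vpc-lattice create-service-network-vpc-association \
    --service-network-identifier "$sn_id" --vpc-identifier "$vpc_id"

  # 2. Service owner: create a Lambda target group and register the function.
  tg_id=$(aws vpc-lattice create-target-group --name "${svc_name}-tg" \
    --type LAMBDA --query id --output text)
  aws vpc-lattice register-targets --target-group-identifier "$tg_id" \
    --targets "id=${lambda_arn}"

  # 3. Service owner: create the service with an HTTP listener forwarding to the target group.
  svc_id=$(aws vpc-lattice create-service --name "$svc_name" \
    --query id --output text)
  aws vpc-lattice create-listener --service-identifier "$svc_id" \
    --name http-80 --protocol HTTP --port 80 \
    --default-action "{\"forward\":{\"targetGroups\":[{\"targetGroupIdentifier\":\"${tg_id}\",\"weight\":100}]}}"

  # 4. Associate the service with the service network so associated VPCs can reach it.
  aws vpc-lattice create-service-network-service-association \
    --service-network-identifier "$sn_id" --service-identifier "$svc_id"
}

# Usage (placeholders):
#   lattice_setup demo-network demo-service <lambda-function-arn> <vpc-id>
```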

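For our example, we will use the sample infrastructure and applications from the aws-samples repository at https://github.com/aws-samples/build-secure-multi-account-vpc-connnectivity-applications-with-amazon-vpc-lattice and analyze the deployed key components in the AWS Console.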

You can deploy the reference architecture using the instructions contained in the ReadMe file in the repository.

You can also use three different VPCs belonging to a single account to ease the deployment process (as we'll do in this article).

You'll end with this sample infrastructure:

Image source: https://github.com/aws-samples/build-secure-multi-account-vpc-connnectivity-applications-with-amazon-vpc-lattice

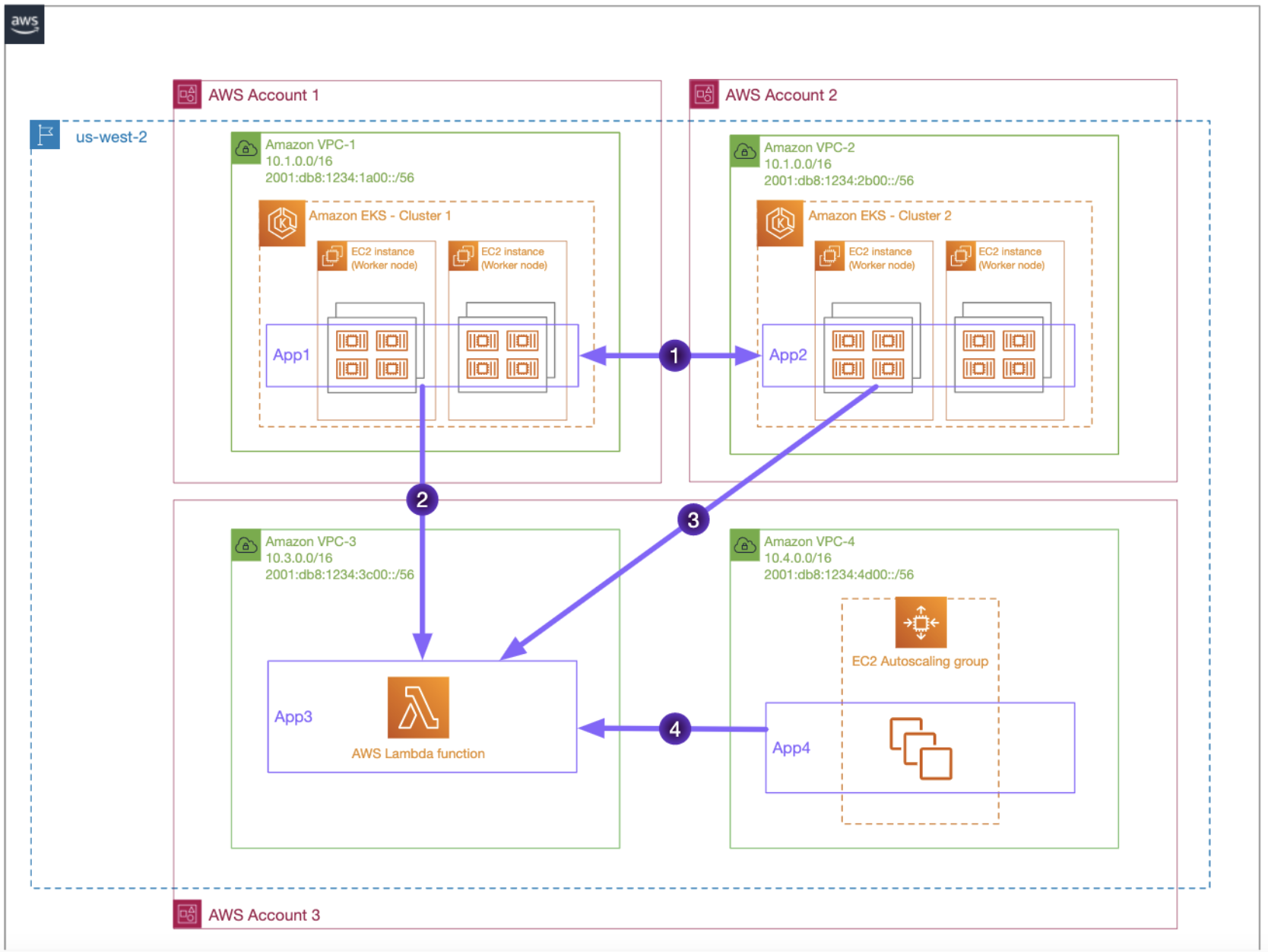

In our example, App1 and App2 will need to communicate bidirectionally, and App3 will be consumed by App1, App2, and App4.

The deployment takes about 10-15 minutes; once it completes, you will see that our microservices can communicate.

Bug Alert: there's a missing step in the deployment instructions; these two lines will make the deployment fail:

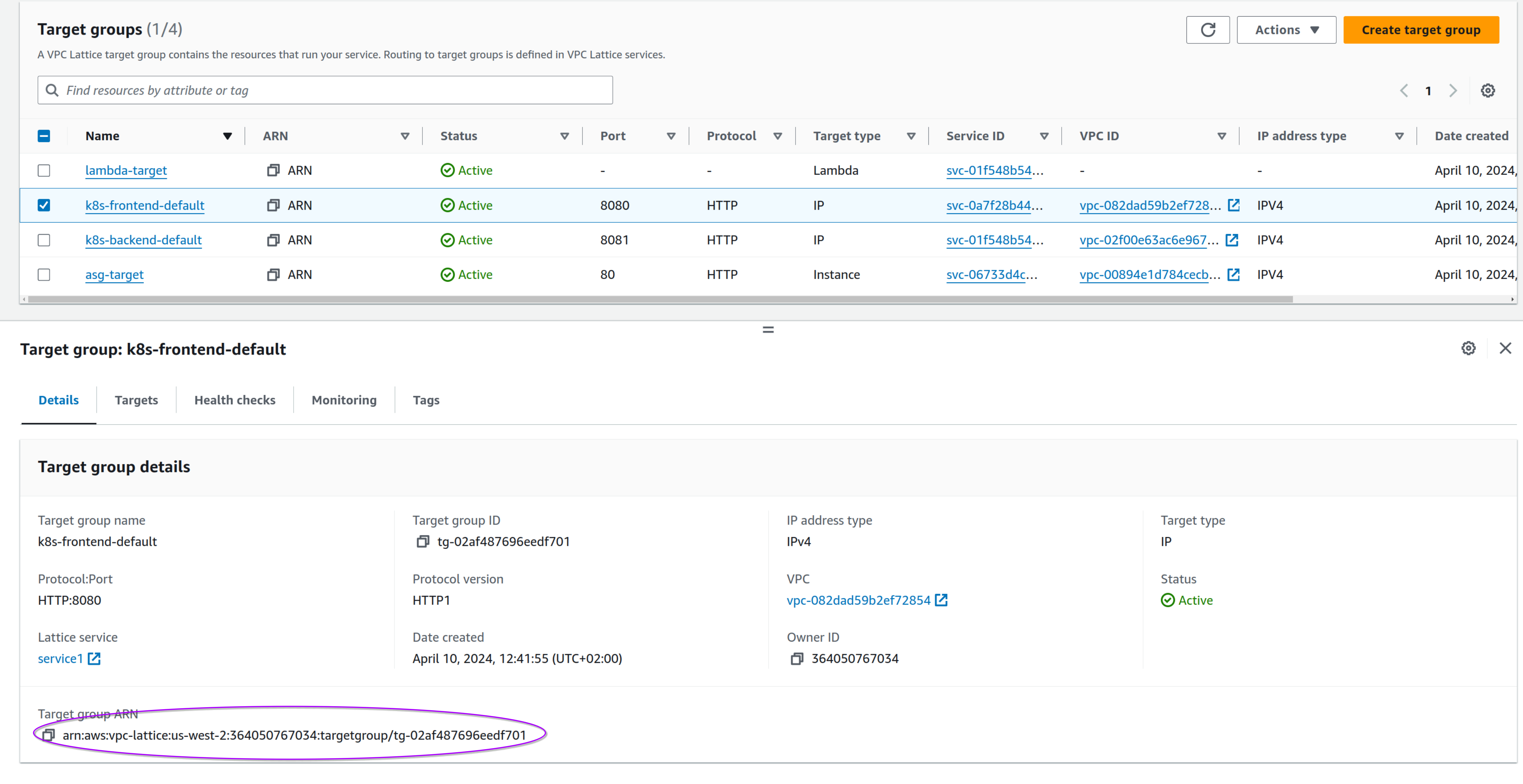

export TARGETCLUSTER1={TARGET_GROUP_ARN}

export TARGETCLUSTER2={TARGET_GROUP_ARN}

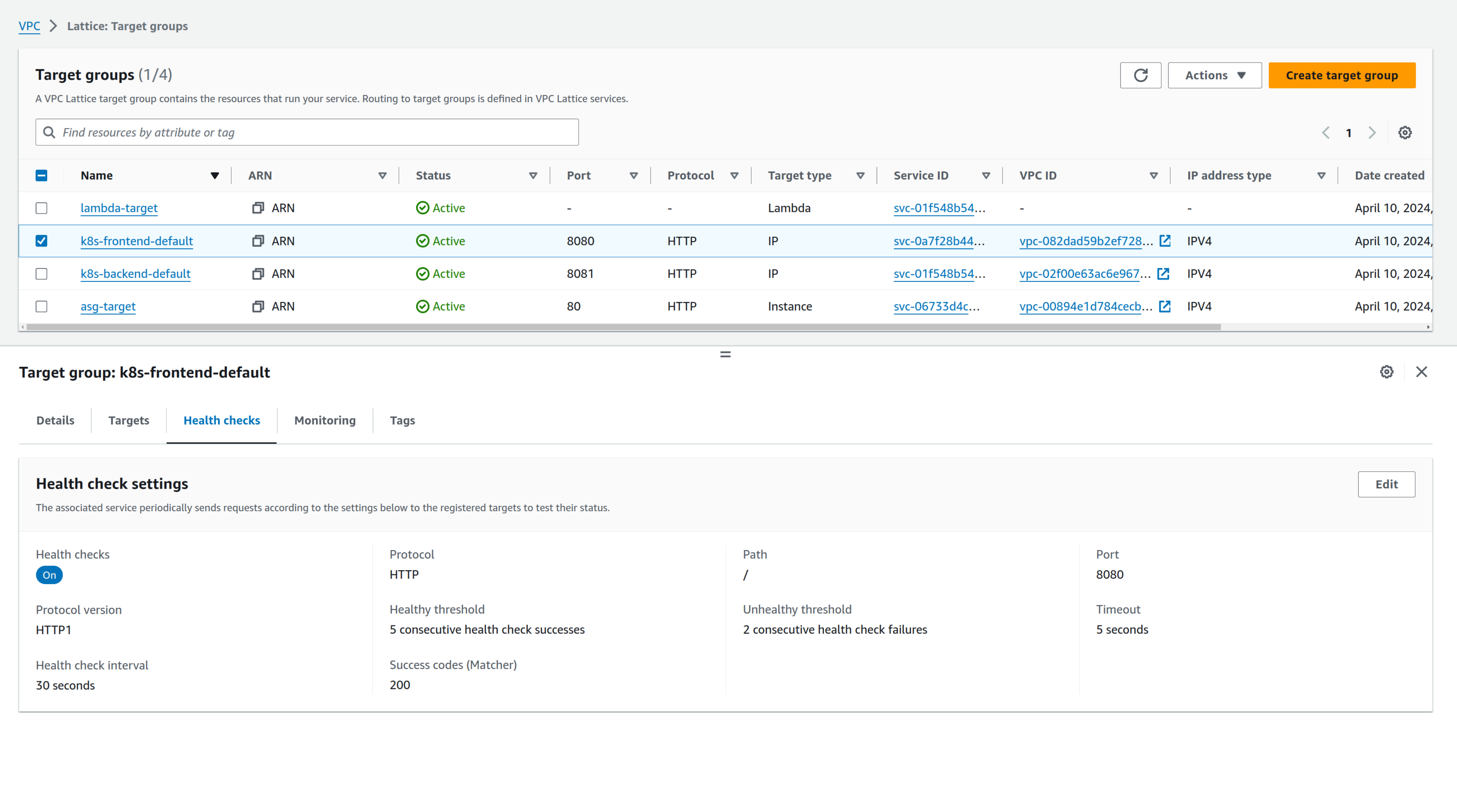

You need to set these variables to the ARNs of the VPC Lattice target groups.

For TARGETCLUSTER1, use the ARN of k8s-frontend-default; for TARGETCLUSTER2, use the ARN of k8s-backend-default. This is a sample from our deployment:

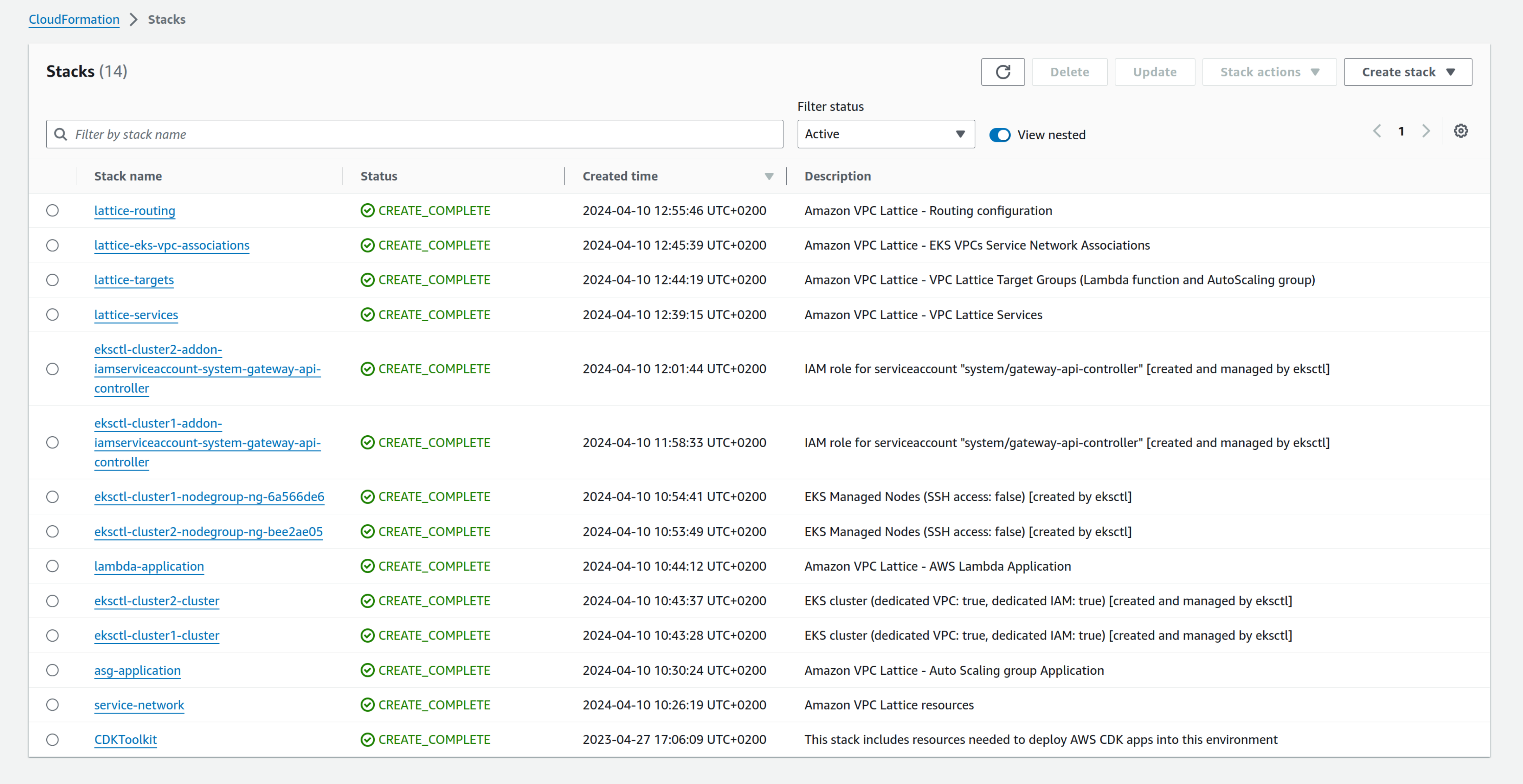
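Instead of copying the ARNs from the console, you can look them up with the AWS CLI v2. This is a small sketch: the lattice_tg_arn helper is our own, hypothetical name, and it assumes the target group names start with the k8s-frontend-default and k8s-backend-default prefixes mentioned above.

```shell
#!/usr/bin/env bash
# Sketch: look up VPC Lattice target group ARNs by name prefix instead of
# copying them from the console. Assumes AWS CLI v2 configured for the
# account/Region where the sample is deployed.

# Print the ARN of the first target group whose name starts with the given prefix.
lattice_tg_arn() {
  local prefix="$1"
  aws vpc-lattice list-target-groups \
    --query "items[?starts_with(name, '${prefix}')].arn | [0]" \
    --output text
}

# Usage (after the Gateway API controller has created the target groups):
#   export TARGETCLUSTER1="$(lattice_tg_arn k8s-frontend-default)"
#   export TARGETCLUSTER2="$(lattice_tg_arn k8s-backend-default)"
```

If no target group matches, the CLI prints None, so check both variables before running the deployment again.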

When the CloudFormation deployment finishes, you should have these CloudFormation stacks:

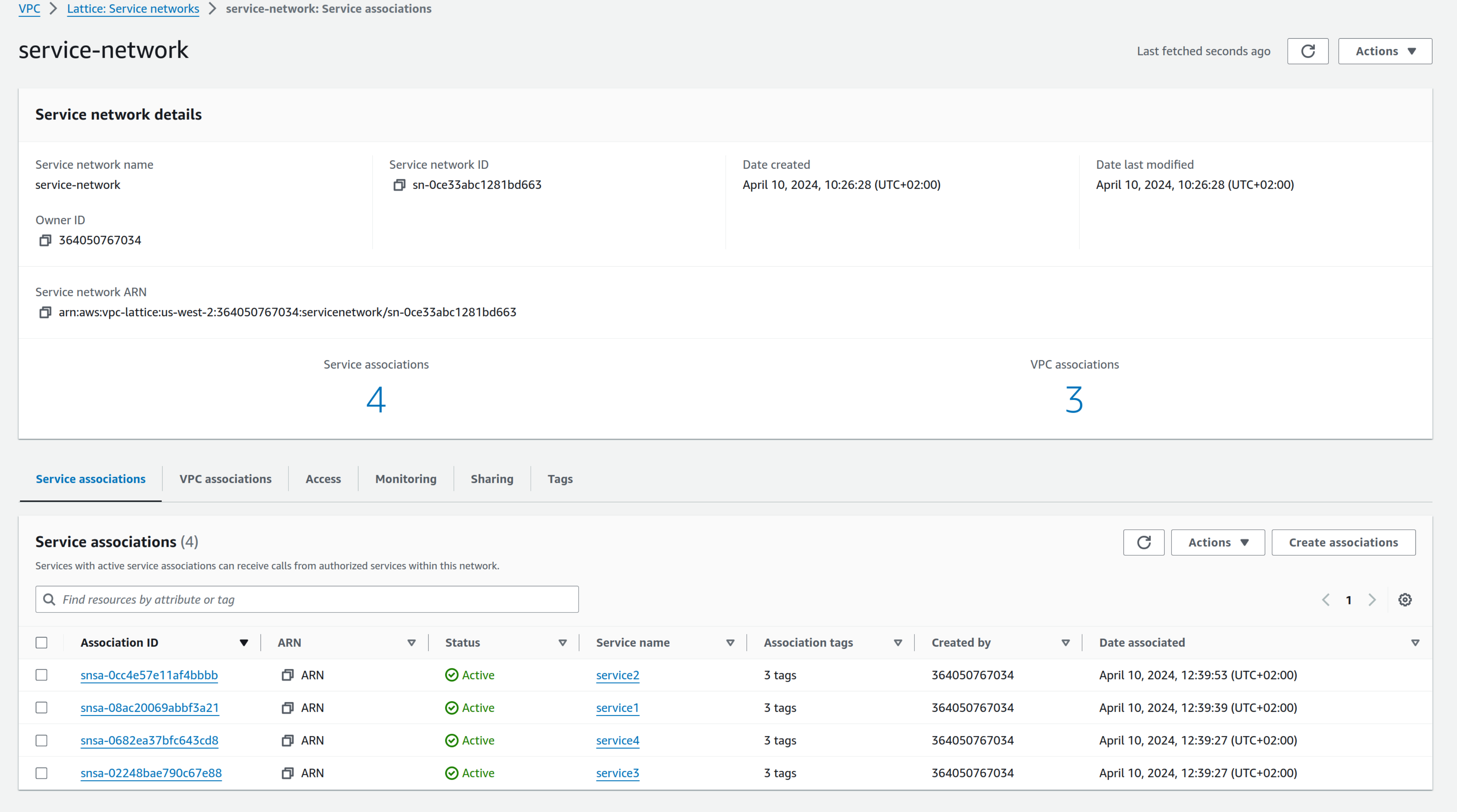

Let's walk through the created resources, starting with the service network:

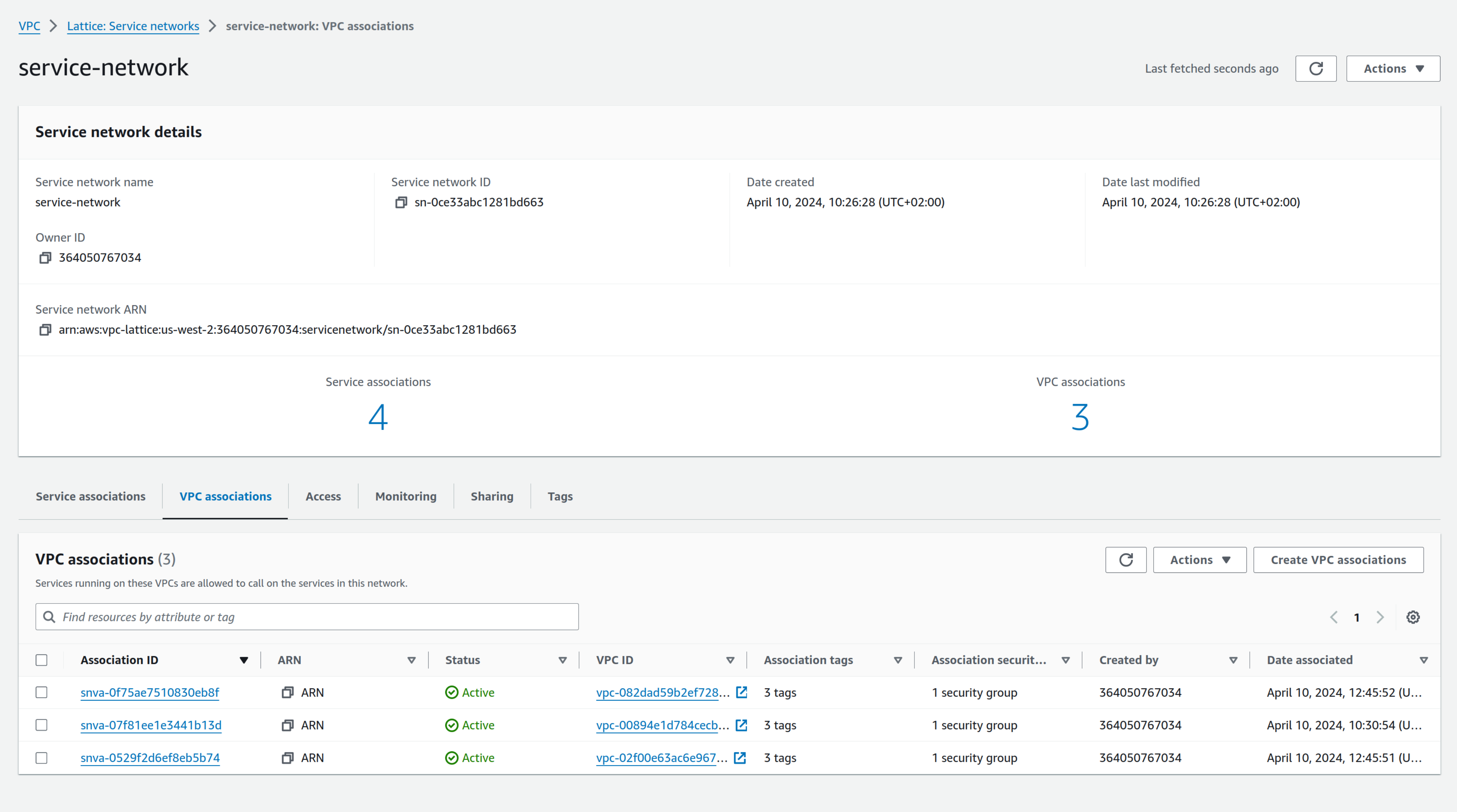

As you can see, four services are associated with the service network, and you can also see the VPCs associated with it.

Next, the VPC Lattice target groups: our Lambda function, Auto Scaling group, and EKS services are registered as targets and, as with ELB target groups, you can view metrics and define health checks.
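Besides the console, you can check target health from the command line. A minimal sketch, assuming the AWS CLI v2; lattice_target_health is a hypothetical helper name and the target group ID is a placeholder.

```shell
#!/usr/bin/env bash
# Sketch: print each registered target of a VPC Lattice target group
# together with its health status. Helper name and IDs are hypothetical.
lattice_target_health() {
  local tg_id="$1"
  aws vpc-lattice list-targets --target-group-identifier "$tg_id" \
    --query 'items[].[id, status]' --output text
}

# Usage:
#   lattice_target_health tg-0123456789abcdef0
```

Each output line shows a target ID and its status, such as HEALTHY or UNHEALTHY.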

Note that to register the targets managed by the EKS clusters, we had to deploy the Gateway API Controller and its GatewayClass into the clusters and export the services using the configuration applied with these commands:

kubectl config use-context cluster1

kubectl apply -f ./vpc-lattice/routes/frontend-export.yaml

kubectl config use-context cluster2

kubectl apply -f ./vpc-lattice/routes/backend-export.yaml

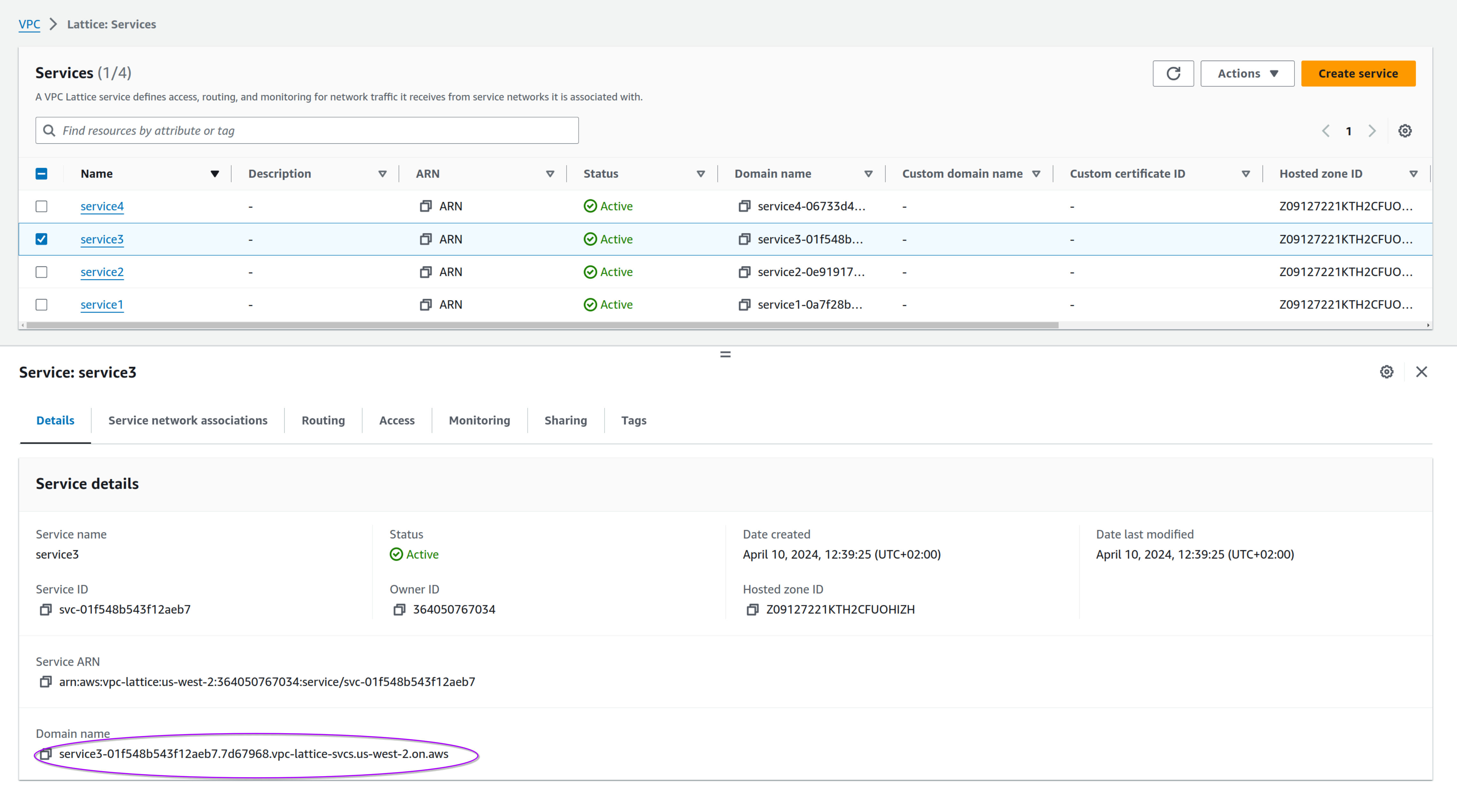

Last but not least, let's look at the defined services. You can reach them through the DNS names that VPC Lattice generates for them.

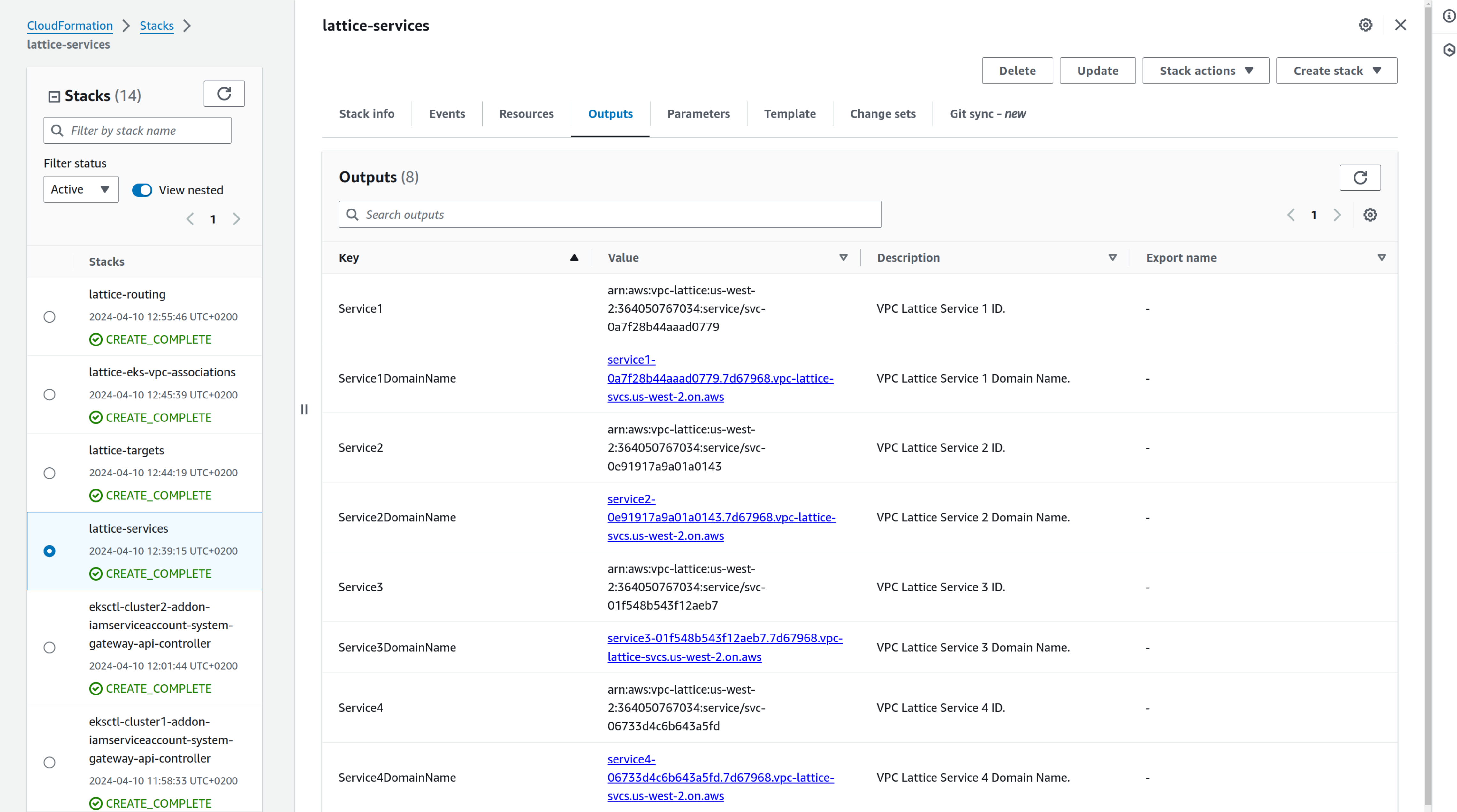

You can find the complete list of domain names in the Outputs of the CloudFormation stack named "lattice-services":

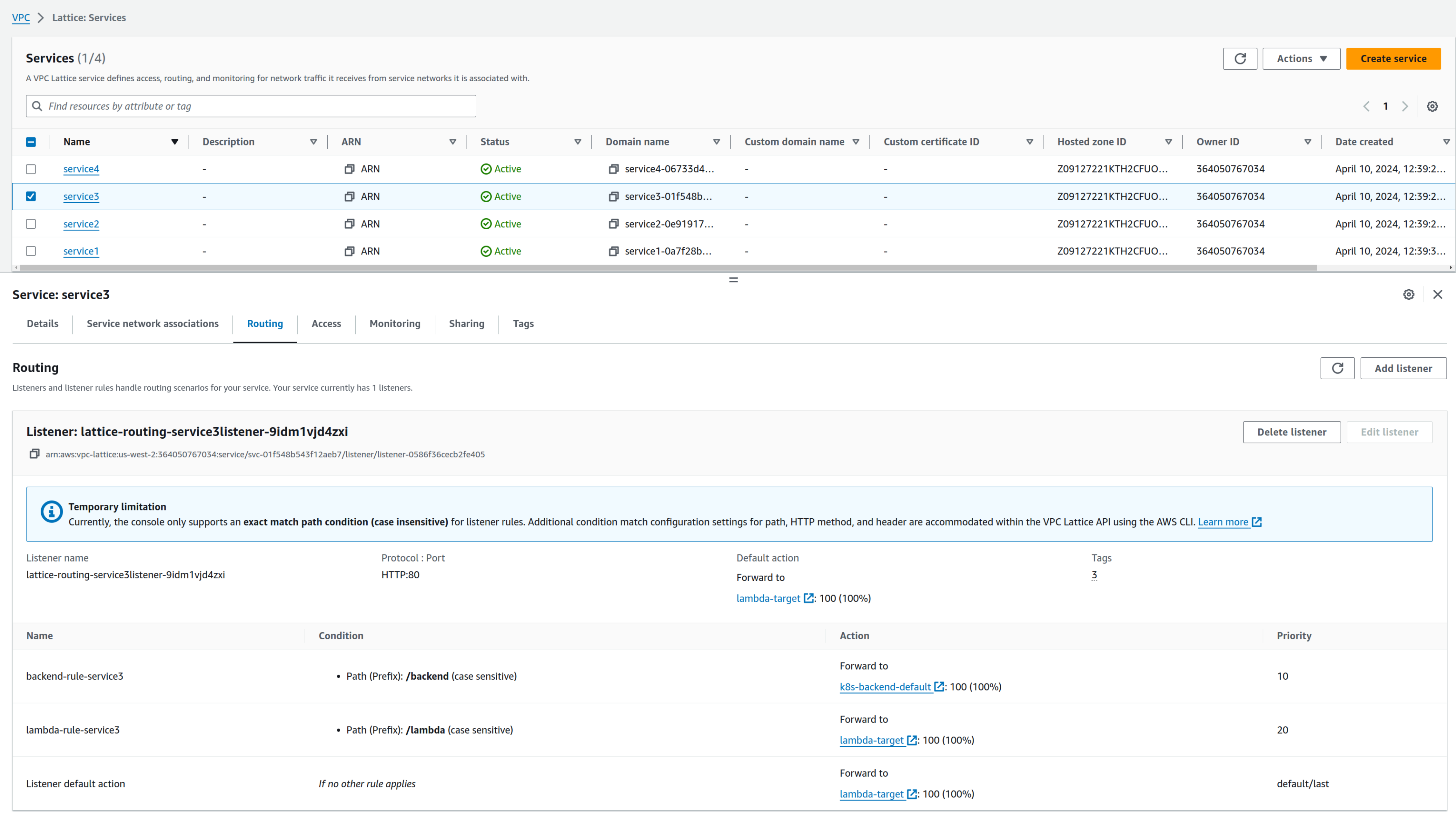
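The same outputs can be read with the AWS CLI, which is handy when scripting tests against the services. A sketch under our assumptions: stack_output is a hypothetical helper, the output key in the usage comment is a placeholder (check the real keys in the Outputs tab), the output value is assumed to be a bare DNS name, and the curl call must run from a workload in a VPC associated with the service network.

```shell
#!/usr/bin/env bash
# Sketch: read a service DNS name from the "lattice-services" stack outputs.
# Helper name and output key are hypothetical; assumes AWS CLI v2.

# Print the value of one output of a CloudFormation stack.
stack_output() {
  local stack="$1" key="$2"
  aws cloudformation describe-stacks --stack-name "$stack" \
    --query "Stacks[0].Outputs[?OutputKey=='${key}'].OutputValue | [0]" \
    --output text
}

# Usage (from an instance in a VPC associated with the service network):
#   svc_dns="$(stack_output lattice-services Service1DnsName)"   # hypothetical key
#   curl -s "http://${svc_dns}/"
```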

Each service also has its own routing rules; for service3, requests to the /backend path are forwarded to cluster2, while /lambda requests are forwarded to the Lambda application.
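A path-based rule like the /backend one can also be created from the CLI. This sketch assumes an existing service, listener, and target group; the helper names and all IDs are hypothetical placeholders, and it assumes the AWS CLI v2.

```shell
#!/usr/bin/env bash
# Sketch: add a path-prefix rule to a VPC Lattice listener.
# Helper names and IDs are hypothetical.

# Build the --match JSON for a path prefix.
path_prefix_match() {
  printf '{"httpMatch":{"pathMatch":{"match":{"prefix":"%s"}}}}' "$1"
}

# Build the --action JSON that forwards all matching requests to one target group.
forward_action() {
  printf '{"forward":{"targetGroups":[{"targetGroupIdentifier":"%s","weight":100}]}}' "$1"
}

# Args: service ID, listener ID, rule name, priority, path prefix, target group ID
add_path_rule() {
  aws vpc-lattice create-rule \
    --service-identifier "$1" --listener-identifier "$2" \
    --name "$3" --priority "$4" \
    --match "$(path_prefix_match "$5")" \
    --action "$(forward_action "$6")"
}

# Usage:
#   add_path_rule svc-0123456789abcdef0 listener-0123456789abcdef0 backend 10 /backend tg-0123456789abcdef0
```

Rules are evaluated by priority (lower numbers first), and requests that match no rule fall through to the listener's default action.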

Now that the infrastructure is deployed, feel free to experiment with the behaviour of your applications and environment (why not try IAM authentication between services?).

This is only a starting point, and you can also find an excellent AWS workshop with a good walkthrough here.

So, should you adopt VPC Lattice? The correct answer is, as always: "It depends." Let's evaluate its limits and their impact on our design.

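The first is the mandatory one-to-one association between a VPC and a service network: a VPC can be associated with only one service network at a time, so its workloads can only reach the services exposed through that network. A service can be associated with multiple service networks, but you still need to plan carefully how services and VPCs are grouped.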
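Because of this constraint, it's worth checking whether a VPC is already associated with a service network before planning new associations. A minimal sketch with the AWS CLI v2; vpc_service_network is a hypothetical helper and the VPC ID is a placeholder.

```shell
#!/usr/bin/env bash
# Sketch: show which service network (if any) a VPC is associated with,
# and the association status. Helper name and VPC ID are hypothetical.
vpc_service_network() {
  aws vpc-lattice list-service-network-vpc-associations \
    --vpc-identifier "$1" \
    --query 'items[].[serviceNetworkName, status]' --output text
}

# Usage:
#   vpc_service_network vpc-0123456789abcdef0
```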

You cannot share services across Regions because a service network is a regional construct: you'll need a hybrid approach, resorting to "traditional" connectivity methods like Transit Gateway peering and VPC peering.

On the other hand, there are great benefits: service owners can configure and publish their components on their own, while a central team keeps control of a complex environment.

We didn't look at IAM policies in this article, but implementing IAM authentication and authorization is key to a secure microservice implementation.

VPC Lattice also offers a complete service mesh solution because it can link EC2, EKS, ECS, and Lambda workloads.

Cost is another important factor: as your service mesh grows, costs increase proportionally.

You pay $0.025 per hour for each service, so 10 services cost $182.50 per month (10 × $0.025 × 730 hours). For complex architectures with hundreds of microservices, a different approach may have a smaller impact on your AWS bill.
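To see how the per-service charge scales, here is a back-of-the-envelope shell sketch. It assumes the $0.025 per service-hour rate quoted above and a 730-hour month (the convention AWS uses for monthly estimates); it deliberately ignores any data-processing or per-request charges, so treat its numbers as a lower bound.

```shell
#!/usr/bin/env bash
# Sketch: monthly cost of the per-service hourly charge only.
# Assumptions: $0.025 per service-hour, 730 hours per month.
# Data-processing and request charges are NOT included.

HOURLY_RATE_USD=0.025
HOURS_PER_MONTH=730

# Print the monthly cost (USD, two decimals) for the given number of services.
monthly_service_cost() {
  local services="$1"
  awk -v n="$services" -v rate="$HOURLY_RATE_USD" -v hours="$HOURS_PER_MONTH" \
    'BEGIN { printf "%.2f\n", n * rate * hours }'
}

for n in 10 100 500; do
  echo "$n services: \$$(monthly_service_cost "$n")/month"
done
```

For 10 services this reproduces the $182.50/month figure above; at a few hundred services the hourly charge alone reaches thousands of dollars per month.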

Microservices aren't simple: they can increase the overall complexity of the architecture and require good planning and management over time.

Have you already had the chance to experiment with VPC Lattice? What are your thoughts about this new approach?

Let us know in the comments!

Proud2beCloud is a blog by beSharp, an Italian APN Premier Consulting Partner expert in designing, implementing, and managing complex Cloud infrastructures and advanced services on AWS. Before being writers, we are Cloud Experts working daily with AWS services since 2007. We are hungry readers, innovative builders, and gem-seekers. On Proud2beCloud, we regularly share our best AWS pro tips, configuration insights, in-depth news, tips&tricks, how-tos, and many other resources. Take part in the discussion!