Event-Driven Architectures demystified: from Producer to Consumer – part 2

11 February 2026 - 17 min. read

Eric Villa

Solutions Architect

AWS re:Invent 2019 is over. One week has passed since the end of the most important Cloud event of the year, and I have had time to recover from jet lag, to partially clear the scary e-mail backlog that only a week-long conference can produce, and even to have some fun along the Pacific Ocean with my beSharp mates before flying back home. So now seems to be the perfect moment to reorganize my thoughts and share my impressions of the event.

It was my 8th re:Invent in a row, counting from the very first one back in 2012 (I'm pretty sure I'm the only one in Italy, and I guess one of very few people outside the US, to hold this small record). Fun fact: I'm Italian, I'm 37, and in my life I've been to Venice twice and to the "fake" Venice in the middle of Las Vegas eight times. Sounds weird, and not only to me.

The hype of being there was the same as the first time, but since 2012 many things have changed about AWS and the Cloud landscape in general. At that time, real Cloud users formed a small niche of pioneers struggling with the early adoption of a relatively new paradigm. Nowadays, Cloud is "the new normal," and almost everyone is talking about it. There are tons of websites, blogs, and social media users that published a detailed drill-down of every new AWS service a couple of minutes after its launch during one of the keynotes. You can find useful examples here and here.

For this reason, this year I'm not going through every single announcement and describing all the features and technical details; instead, I will focus on the AWS "vision" that emerges from the most disruptive and game-changing (IMHO) news coming from Vegas. So, let's start!

The infrastructure part of the game is one of the most neglected, while the AWS hype is more and more developer-driven by "those hipster guys who think the world ends at a REST endpoint" :) (cit.). But, as you can easily guess, innovation in this layer of the cake is the key to releasing any other kind of higher-level service.

Talking about computing, two of the most exciting services are Local Zones (ultra-low-latency edge computing for metropolitan areas) and AWS Wavelength (ultra-low-latency edge computing inside telco providers' 5G networks). My entirely personal guess is that both services are based on AWS Outpost, which was announced at last year's re:Invent and went GA on December 3rd.
I had the chance to discuss Outpost in great depth (and to physically touch it for the first time at the re:Invent Expo) with Anthony Liguori, one of the Outpost project supervisors. He told me that Outpost is an exact reproduction, on a smaller scale, of the latest-generation EC2 and EBS infrastructure currently in production inside public AZs. This sounded quite strange to me, since the Outpost rack is full of (AWS custom) 1U servers and networking devices, but there's no sign of storage devices.

The answer is surprising, yet relatively simple. All the 1U servers are full-length, so right next to the motherboard there's plenty of room for a lot of NVMe SSDs. The Nitro chip directly manages all these drives, so the same physical devices can act either as EBS or as Instance (ephemeral) Storage, depending on the requests users make to the AWS APIs. In the case of Instance Storage, the logical volume is exposed "locally" through the PCIe bus, while EBS is abstracted across different servers and then exposed over Ethernet, thanks to the "huge" networking bandwidth available inside Outpost. I guess a third Outpost storage abstraction based on the same hardware would be S3 (without the 11-nines durability, sorry!), which will be available in 2020.

I was given no further details about storage redundancy and fault tolerance (RAID? Erasure coding?); I was only told that all the magic is, again, done by the Nitro chip. When a specific 1U server reaches a predefined threshold of failed drives, the entire server is marked as "to be replaced," and the Instance Storage and EBS control planes can react accordingly. A bit unconventional, but an ingenious solution, IMHO.

Just one "small" difference from public Availability Zones: even though AWS has said many times that its datacenters run on custom networking hardware (powered by ASICs from Annapurna Labs), inside Outpost there are standard (but extremely powerful) Juniper networking devices, to maximize compatibility with customers' networking equipment.

Talking about computing again, AWS seems to be leveraging its acquisition of Annapurna Labs heavily, since the other two major computing announcements, AWS Inferentia and AWS Graviton 2, are again about custom silicon.
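To make the failed-drive policy described above for Outpost servers a bit more concrete, here is a toy Python model of it. It is a sketch under my own assumptions, not AWS code: the threshold value (`FAILED_DRIVE_THRESHOLD = 2`), the `Server` class, and the `record_drive_failure` helper are all hypothetical, since neither the real threshold nor the internals of the Nitro-based logic were disclosed. What it shows is the shape of the rule: individual drives are not repaired in place; the whole server is flagged once too many of its drives fail, and the control planes react to that single signal.

```python
from dataclasses import dataclass, field

# Hypothetical value: the real threshold used inside Outpost was not disclosed.
FAILED_DRIVE_THRESHOLD = 2


@dataclass
class Server:
    """A toy 1U server with a set of NVMe drives (all names are illustrative)."""
    name: str
    drives: dict[str, bool] = field(default_factory=dict)  # drive id -> healthy?
    to_be_replaced: bool = False

    def failed_drives(self) -> int:
        return sum(1 for healthy in self.drives.values() if not healthy)


def record_drive_failure(server: Server, drive_id: str,
                         threshold: int = FAILED_DRIVE_THRESHOLD) -> bool:
    """Mark one drive as failed and flag the whole server once the threshold is reached.

    Returns True only on the call that flags the server, so a caller (standing in
    for the Instance Storage / EBS control planes) can react exactly once.
    """
    server.drives[drive_id] = False
    if not server.to_be_replaced and server.failed_drives() >= threshold:
        server.to_be_replaced = True
        return True
    return False


if __name__ == "__main__":
    srv = Server("rack1-u07", {f"nvme{i}": True for i in range(8)})
    print(record_drive_failure(srv, "nvme3"))  # False: 1 failed drive, below threshold
    print(record_drive_failure(srv, "nvme5"))  # True: threshold of 2 reached
    print(srv.to_be_replaced)                  # True
```

Presumably the trade-off is that the healthy drives on a flagged server are retired along with the broken ones, in exchange for a much simpler operational model: one field-replaceable unit to swap instead of individual disks to track.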
Of the two, Inferentia (powering the new Inf1 instance family) is "simply" a custom ML chip with an impressive performance/cost ratio compared to standard GPU-based alternatives. The really (possibly) game-changing news is Graviton 2, and not only because of the impressive spec bump over last year's Graviton 1, the first custom ARM-based CPU by AWS. The bump is so large that the 6th generation of EC2 instances seems to be based, for the first time and at least initially, ONLY on the ARM architecture. To be honest, I doubt this will be the end of the x86 era in the public Cloud (too many legacy workloads will never be recompiled for ARM), but I think it's still a big signal. The x86 architecture is 40 years old, and despite continuous revisions and evolutions, it carries a lot of issues and inefficiencies, mainly due to the "legacy" part of the instruction set, which is kept for compatibility reasons. Add to the recipe the many qualified rumors about Apple trying to abandon Intel (and x86) in its laptops (another field where, as in the public Cloud, the performance/energy-efficiency ratio is extremely critical) and replacing it with its award-winning, custom-designed ARM chips (already used in iPhones and iPads). It's a super-interesting trend, corroborated by the choices of two Silicon Valley giants.

The most "visionary" announcement of the whole re:Invent, closing our computing chapter, is by far Amazon Braket, in the hope that it's not only a marketing-driven move in the race for Quantum Supremacy against Google and IBM. Quantum computing is as complex to understand in theory as it is difficult to implement in the real world. For this reason, the availability of different managed quantum infrastructures (even if extremely simple and at the prototype stage) is a big opportunity for scientists, who usually have significant difficulty finding proper "hardware" to conduct research in this field.

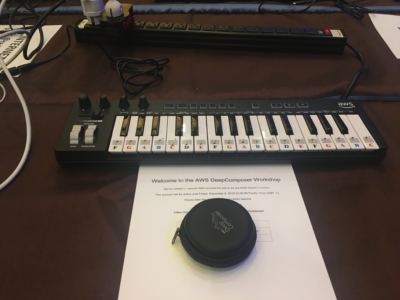

An honorable mention goes to Lambda Provisioned Concurrency, which finally solves the infamous cold-start issue for serverless applications (at the price of a small tax for leaving the pure on-demand model), and to EKS on Fargate, announced 2 years ago but still, IMHO, the only reasonable way to run Kubernetes on AWS.

On the networking side, there were no disruptive announcements, but a lot of interesting ones that add new features to existing services and make them more enterprise-ready: support for complex networking topologies (multicast support, inter-region peering, and Network Manager for Transit Gateway), proper performance (accelerated site-to-site VPN), and third-party integrations (VPC ingress routing). With the latter, very large companies will finally be able to fill their VPCs with security appliances and UTMs deployed between every application layer and every subnet for compliance reasons, mostly with no idea of what to do with them. Don't try this at home.

There were many intriguing announcements on databases, DWHs, and data lakes, covering features (Aurora Machine Learning integration, Redshift Managed Storage), performance (RDS Proxy, AQUA for Redshift), and governance (Athena and Redshift Federated Query). Redshift, in particular, is getting an impressive number of new features and performance bumps. It's another clear and direct message to Oracle customers, and another episode in the battle between the different philosophies of the two IT giants: specialized databases vs. a general-purpose database. Who's gonna win?

The main course is obviously AI/ML: THE trending topic of the last three years in the Cloud landscape, if not in the whole IT world (forgive me, blockchain lovers!).
On the AI services side, it seems that engineers in Seattle are bringing AWS (and Amazon.com) AI experience into more and more services and fields, from fraud detection to customer care, from image recognition to code analysis. Among all these applications, the Amazon CodeGuru idea of leveraging ML models to review and profile code seems to be the most promising one. But the service is at quite an early stage (only Java support for now), and it's certainly not cheap. I'm really curious to see whether the performance and cost optimizations derived from the reviewing and profiling processes will offset the cost of the service. I just have to resurrect some old pieces of Java code to try.

On the ML side, it's basically all about putting SageMaker on steroids, adding a dedicated IDE, a collaboration environment, experiments, processing automation, model monitoring, and debugging… even automatic model generation. I have some doubts about this last claim: what will the compromise be between ease of use, accuracy, and flexibility? Maybe you can ask some humans...

DeepComposer deserves a separate discussion (but it certainly didn't deserve my 6-hour queue to get into the last available re:Invent workshop… sigh). I'm a keyboard player, and when Matt Wood announced DeepComposer, I was so excited, imagining myself showcasing the power of ML to our customers while playing finger-burning, Dream-Theater-like synth solos in front of them. Here comes the "expectations vs. reality" meme, with me playing a one-finger version of "Twinkle Twinkle Little Star" while unsuccessfully trying to teach the DeepComposer service how to harmonize and arrange it. All joking aside, I think this service has been widely misunderstood. Let's try to clarify:

Indeed, I think DeepComposer was launched not to be a production-ready service in itself, but rather to showcase the power of Generative Adversarial Networks (GANs), the same ML technique used for Deep Fakes. A very curious note about this: in the DeepComposer models available during the preview, the two networks (generator and discriminator) are trained not on audio files, but on images! By using "piano roll" images (a 2D representation of a melody played over time), it's easier to treat musical data as if it were an image, reusing many well-known image-related feature-extraction techniques. Brilliant solution; execution still to be checked.

That's all for now from Las Vegas! (actually from Pavia :-) ) If you want to follow up on the discussion about re:Invent 2019, please share your comments below or contact us!
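For readers curious about the piano-roll trick mentioned above, here is a minimal Python sketch of how a short melody can be turned into a 2D grid that image-oriented models can consume. The encoding (MIDI pitch numbers on fixed time steps), the function names `piano_roll` and `render`, and the melody data are my own illustrative assumptions; this is not the actual DeepComposer preprocessing.

```python
# Illustrative encoding: (MIDI pitch, start step, duration in steps); 60 = middle C (C4).
Note = tuple[int, int, int]

# Opening phrase of "Twinkle Twinkle Little Star": C C G G A A G (last G held 2 steps).
TWINKLE: list[Note] = [
    (60, 0, 1), (60, 1, 1), (67, 2, 1), (67, 3, 1),
    (69, 4, 1), (69, 5, 1), (67, 6, 2),
]


def piano_roll(notes: list[Note], low: int, high: int, steps: int) -> list[list[int]]:
    """Build a binary grid with one row per pitch (high to low) and one column per time step.

    A cell is 1 while that pitch is sounding, so the melody becomes a small
    black-and-white "image": pitch on the vertical axis, time on the horizontal one.
    """
    grid = [[0] * steps for _ in range(high - low + 1)]
    for pitch, start, duration in notes:
        if not low <= pitch <= high:
            continue  # pitch outside the chosen range: skip it
        row = high - pitch  # highest pitch on the top row, as in a picture
        for t in range(start, min(start + duration, steps)):
            grid[row][t] = 1
    return grid


def render(grid: list[list[int]]) -> str:
    """Draw the grid as text: '#' for a sounding note, '.' for silence."""
    return "\n".join("".join("#" if cell else "." for cell in row) for row in grid)


if __name__ == "__main__":
    print(render(piano_roll(TWINKLE, low=60, high=69, steps=8)))
```

Printing the grid shows the three pitches of the phrase (C, G, and A) as short horizontal strokes at different heights: the kind of 2D pattern that image-oriented feature extractors are designed to pick up.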